The rise of online education and massive open online courses (MOOCs) has prompted much naysaying about their effectiveness, with detractors citing single-digit completion rates and short-lived pilot programs.

Amidst all the arguments about “flipped classrooms” and “hybrid learning,” however, few people have actually analyzed what makes MOOCs work (or fail): the content. Online learners spend most of their time watching videos — but are the videos any good?

This year edX, the online learning platform co-run by MIT and Harvard University, gave researchers at MIT’s Computer Science and Artificial Intelligence Lab (CSAIL) data on the second-by-second viewing habits of more than 100,000 learners across more than 6.9 million video-watching sessions.

In a paper published this spring, the CSAIL team outlined some key findings on what online learners want from videos. These include:

- Brevity (viewers generally tune out after six minutes)

- Informality, with professors seated at a desk, not standing behind a podium

- Lively visuals rather than static PowerPoint slides

- Fast talkers (professors seen as the most engaging spoke at 254 words per minute)

- More pauses, so viewers can soak in complex diagrams

- Web-friendly lessons (existing videos broken into shorter chunks are less effective than ones crafted for online audiences)

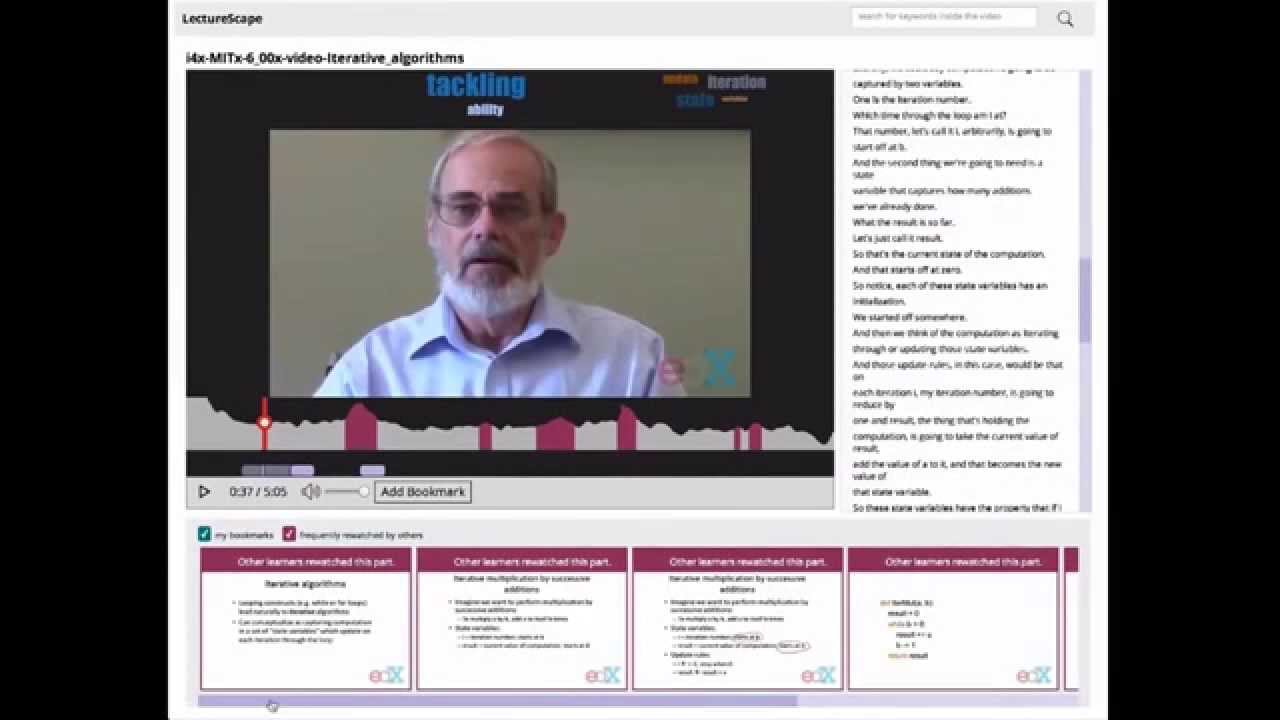

These insights form the basis of the CSAIL team’s LectureScape, a “YouTube for MOOCs” that seeks to reinvent how online learners watch videos.

LectureScape uses data on viewing behavior — particularly the “interaction peaks” that correspond to points of interest or confusion — to present MOOC videos in a way that’s more intuitive, dynamic, and effective:

- A timeline shows which parts other users have most frequently watched

- An interactive transcript lets users enter keywords to find relevant segments

- A mechanism automatically creates word clouds and summaries of individual sections, as well as the whole presentation

- Content from popular slides automatically appears in the following slide, as users will likely want to refer back to that information
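To make the idea of an “interaction peak” more concrete, here is a minimal sketch of how such peaks might be located in second-by-second viewing data. It is not LectureScape’s actual method: it assumes a hypothetical input list where entry `t` counts interaction events (plays, pauses, seeks landing) at second `t` of a video, smooths that signal with a triangular moving average, and reports local maxima that rise well above the video’s average activity. The function names and the `half_width` and `min_ratio` parameters are illustrative assumptions.

```python
from typing import List, Sequence


def smooth(counts: Sequence[float], half_width: int = 2) -> List[float]:
    """Triangular moving average; weights fall off linearly from the center.

    Near the edges, only in-range neighbors are used and the weights are
    renormalized, so the output has the same length as the input.
    """
    n = len(counts)
    out: List[float] = []
    for i in range(n):
        total = 0.0
        weight = 0.0
        for k in range(-half_width, half_width + 1):
            j = i + k
            if 0 <= j < n:
                w = half_width + 1 - abs(k)  # e.g. 1, 2, 3, 2, 1 for half_width=2
                total += w * counts[j]
                weight += w
        out.append(total / weight)
    return out


def find_interaction_peaks(
    counts: Sequence[float], half_width: int = 2, min_ratio: float = 1.5
) -> List[int]:
    """Return the seconds where smoothed activity is a local maximum and is
    at least `min_ratio` times the video-wide mean (a hypothetical heuristic)."""
    s = smooth(counts, half_width)
    if not s:
        return []
    mean = sum(s) / len(s)
    if mean == 0:
        return []  # no interactions at all, so no peaks
    peaks: List[int] = []
    for t in range(len(s)):
        left = s[t - 1] if t > 0 else float("-inf")
        right = s[t + 1] if t < len(s) - 1 else float("-inf")
        # Strictly above the left neighbor, at least the right one:
        # on a flat plateau this keeps only the first second.
        if s[t] > left and s[t] >= right and s[t] >= min_ratio * mean:
            peaks.append(t)
    return peaks


if __name__ == "__main__":
    views = [0] * 20
    views[5], views[14] = 10, 8  # two bursts of activity in a 20-second clip
    print(find_interaction_peaks(views))  # [5, 14]
```

Smoothing before peak-picking keeps a single noisy second from registering as a peak; a triangular kernel is used rather than a flat box so that an isolated burst produces one clear maximum instead of a plateau.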

As a result, viewers can watch videos more efficiently, skipping sections they already understand or replaying trickier ones, without having to slog through the whole video.

Juho Kim, a graduate student in electrical engineering and computer science, says that the group’s previous work on the tutorial-focused platform ToolScape demonstrated that users learn more effectively with this type of interface. He says that traditional MOOC metrics, such as completion rates, are “too simplistic,” and don’t account for the many learners seeking specific skills (versus intending to formally finish a course).

LectureScape was developed by Kim alongside former postdoc Philip Guo; EECS graduate students Carrie Cai and Shang-Wen Li; EECS professor Rob Miller; and Harvard's Krzysztof Gajos. Kim will present the research at the ACM Symposium on User Interface Software and Technology (UIST) in Honolulu in October.

Kim says the next steps for LectureScape include personalized lecture-video recommendations, in the style of Netflix, as well as “on-demand expansion,” which includes links to relevant videos to clarify potentially confusing topics.

He also hopes to implement the tool on a larger scale to quantify its effect on student engagement and performance. The technology can be easily applied to existing MOOC content, as the tool uses machine learning to automatically segment videos based on visual cues.
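The article does not describe the machine-learning model used for segmentation. As a much simpler stand-in that illustrates the general idea of splitting a lecture at visual changes (for example, slide transitions), the sketch below uses plain frame differencing, not machine learning. It assumes each frame is a hypothetical flattened list of grayscale intensities in [0, 1]; the `threshold` and `min_length` values are illustrative assumptions, not values from the research.

```python
from typing import List, Sequence, Tuple

# Hypothetical frame representation: flattened grayscale intensities in [0, 1].
Frame = Sequence[float]


def frame_difference(a: Frame, b: Frame) -> float:
    """Mean absolute pixel difference between two equally sized frames."""
    if len(a) != len(b):
        raise ValueError("frames must have the same size")
    if not a:
        return 0.0
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def segment_by_visual_change(
    frames: Sequence[Frame], threshold: float = 0.3, min_length: int = 1
) -> List[Tuple[int, int]]:
    """Split frames into half-open (start, end) index ranges.

    A new segment starts wherever consecutive frames differ by more than
    `threshold`, provided the current segment already has at least
    `min_length` frames (this suppresses rapid flicker).
    """
    if not frames:
        return []
    segments: List[Tuple[int, int]] = []
    start = 0
    for i in range(1, len(frames)):
        changed = frame_difference(frames[i - 1], frames[i]) > threshold
        if changed and i - start >= min_length:
            segments.append((start, i))
            start = i
    segments.append((start, len(frames)))
    return segments


if __name__ == "__main__":
    slide_a = [0.0] * 4
    slide_b = [1.0] * 4
    video = [slide_a, slide_a, slide_a, slide_b, slide_b, slide_a]
    print(segment_by_visual_change(video))                # [(0, 3), (3, 5), (5, 6)]
    print(segment_by_visual_change(video, min_length=3))  # [(0, 3), (3, 6)]
```

A learned model can weigh richer cues than raw pixel change, such as distinguishing a new slide from a camera cut to the speaker, which is one reason a fixed threshold like this one would only be a rough first approximation.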