Researchers at the MIT Media Laboratory are developing a system that enables young children to program interactive robots by affixing stickers to laminated sheets of paper.

Not only could the system introduce children to programming principles, but it could also serve as a research tool, to help determine which computational concepts children can grasp at what ages, and how interactive robots can best be integrated into educational curricula.

Last week, at the Association for Computing Machinery and Institute of Electrical and Electronics Engineers’ International Conference on Human-Robot Interaction, the researchers presented the results of an initial study of the system, which investigated its use by children ages 4 to 8.

“We did not want to put this in the digital world but rather in the tangible world,” says Michal Gordon, a postdoc in media arts and sciences and lead author on the new paper. “It’s a sandbox for exploring computational concepts, but it’s a sandbox that comes to the children’s world.”

In their study, the MIT researchers used an interactive robot called Dragonbot, developed by the Personal Robots Group at the Media Lab, which is led by associate professor of media arts and sciences Cynthia Breazeal. Dragonbot has audio and visual sensors, a speech synthesizer, a range of expressive gestures, and a video screen for a face that can assume a variety of expressions. The programs that children created dictated how Dragonbot would react to stimuli.

“It’s programming in the context of relational interactions with the robot,” says Edith Ackermann, a developmental psychologist and visiting professor in the Personal Robots Group, who with Gordon and Breazeal is a co-author on the new paper. “This is what children do — they’re learning about social relations. So taking this expression of computational principles to the social world is very appropriate.”

Lessons that stick

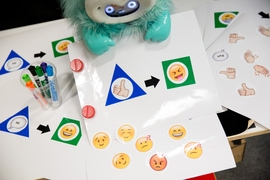

The root components of the programming system are triangular and circular stickers — which represent stimuli and responses, respectively — and arrow stickers, which represent relationships between them. Children can first create computational “templates” by affixing triangles, circles, and arrows to sheets of laminated paper. They then fill in the details with stickers that represent particular stimuli — like thumbs up or down — and responses — like the narrowing or widening of Dragonbot’s eyes. There are also blank stickers on which older children can write their own verbal cues and responses.

Researchers in the Personal Robots Group are developing a computer vision system that will enable children to convey new programs to Dragonbot simply by holding pages of stickers up to its camera. But for the purposes of the new study, the system’s performance had to be perfectly reliable, so one of the researchers would manually enter the stimulus-and-response sequences devised by the children, using a tablet computer with a touch-screen interface that featured icons depicting all the available options.

To introduce a new participant to the system, the researchers would ask him or her to issue an individual command by attaching a single response sticker to a small laminated sheet. When presented with the sheet, Dragonbot executes the command. But when presented with a program, it instead nods its head and says, “I’ve got it.” Thereafter, it executes the specified chain of responses whenever it receives the corresponding stimulus.
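The difference between a command and a program can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class `ToyRobot`, its method names, and the log strings are hypothetical and are not Dragonbot's actual software. The point it shows is that a command runs once and leaves nothing behind, while a program is stored and changes how the robot reacts later.

```python
class ToyRobot:
    """Hypothetical stand-in for the robot; not Dragonbot's real software."""

    def __init__(self):
        self.rules = {}  # internal state: stimulus -> chain of responses
        self.log = []    # everything the robot has done, in order

    def show_command(self, response):
        # A command sheet holds a single response: act now, remember nothing.
        self.log.append(response)

    def show_program(self, stimulus, responses):
        # A program sheet changes internal state: store the rule, then acknowledge.
        self.rules[stimulus] = list(responses)
        self.log.append("nod head")
        self.log.append("say: I've got it")

    def sense(self, stimulus):
        # Run the stored chain of responses for this stimulus, if there is one.
        self.log.extend(self.rules.get(stimulus, []))


robot = ToyRobot()
robot.show_command("widen eyes")                 # executed immediately
robot.sense("thumbs up")                         # no program yet, so nothing happens
robot.show_program("thumbs up", ["narrow eyes", "say: hello"])
robot.sense("thumbs up")                         # now triggers the stored chain
print(robot.log)
```

In this sketch, the first "thumbs up" produces no reaction because no program has been stored yet; the same stimulus after `show_program` runs the whole chain.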

Even the youngest subjects were able to distinguish between individual commands and programs, and interviews after their sessions suggested that they understood that programs, unlike commands, modified the internal state of the robot. The researchers plan additional studies to determine the extent of the children’s understanding.

Paradigm shift

The sticker system is, in fact, designed to encourage a new way of thinking about programming, one that may be more consistent with how computation is done in the 21st century.

“The systems we’re programming today are not sequential, as they were 20 or 30 years back,” Gordon says. “A system has many inputs coming in, complex state, and many outputs.” A cellphone, for instance, might be monitoring incoming transmissions over both Wi-Fi and the cellular network while playing back a video, transmitting the audio over Bluetooth, and running a timer that’s set to go off when the rice on the stove has finished cooking.

As a graduate student in computer science at the Weizmann Institute of Science in Israel, Gordon worked with her advisor, David Harel, on a new programming paradigm called scenario-based programming. “The idea is to describe your code in little scenarios, and the engine in the back connects them,” she explains. “You could think of it as rules, with triggers and actions.” Gordon and her colleagues’ new system could be used to introduce children to the principles of conventional, sequential programming. But it’s well adapted to scenario-based programming.
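The “rules, with triggers and actions” description can be made concrete with a toy example. The Python sketch below is an assumption-laden illustration, far simpler than a real scenario-based programming engine: `ScenarioEngine`, `add_scenario`, and `handle` are hypothetical names, and the triggers and actions are plain strings. It shows small scenarios being written independently, with an engine combining them when an event arrives.

```python
class ScenarioEngine:
    """Hypothetical toy engine: each scenario is a (trigger, actions) rule."""

    def __init__(self):
        self._scenarios = []  # kept in the order they were added

    def add_scenario(self, trigger, actions):
        # Each scenario is written on its own, without knowledge of the others.
        self._scenarios.append((trigger, list(actions)))

    def handle(self, event):
        # The engine "connects" scenarios: every rule whose trigger matches
        # the event contributes its actions, in the order the rules were added.
        performed = []
        for trigger, actions in self._scenarios:
            if trigger == event:
                performed.extend(actions)
        return performed


engine = ScenarioEngine()
engine.add_scenario("thumbs up", ["widen eyes"])
engine.add_scenario("thumbs down", ["narrow eyes"])
engine.add_scenario("thumbs up", ["say: thank you"])  # added later, same trigger

print(engine.handle("thumbs up"))  # ['widen eyes', 'say: thank you']
```

No scenario here spells out the full sequence of behavior; the combined reaction to "thumbs up" emerges from two separate rules, which is the contrast with a single sequential program.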

“It’s actually how we think about how programs are written before we try to integrate it into a whole programming artifact,” she says. “So I was thinking, ‘Why not try it earlier?’”