Last summer, MIT researchers published a paper describing an algorithm that can recover intelligible speech by analyzing the minute vibrations of objects in video, even video captured through soundproof glass.

In June, at the Conference on Computer Vision and Pattern Recognition, researchers from the same groups will describe how the technique can be adapted to infer material properties of physical objects, such as stiffness and weight, from video.

The technique could have applications in the field of “nondestructive testing,” or determining materials’ physical properties without extracting samples from them or subjecting them to damaging physical tests. It might be possible, for instance, to identify structural defects in an airplane’s wing by analyzing video of its vibration during flight.

“One of the big contributions of this work is connecting techniques in computer vision to established theory on physical vibrations and to a whole body of work in nondestructive testing in civil engineering,” says Abe Davis, an MIT graduate student in electrical engineering and computer science who, together with fellow graduate student Katie Bouman, is first author on the paper. “We make this connection pretty explicitly in the paper, which is I think where a lot of the future potential lies, because it bridges these fields.”

Davis and Bouman’s coauthors are their advisors, professors of computer science and engineering Fredo Durand and Bill Freeman, respectively; Justin Chen, a graduate student in civil and environmental engineering; and Michael Rubinstein, who completed his PhD with Freeman and is now at Google Research in Cambridge, Massachusetts. The team applied the technique to two types of objects: rods of fiberglass, wood, and metal, and fabrics draped over a line.

For the rods, the researchers produced vibrations by playing a range of frequencies through a nearby loudspeaker. Because stiff materials vibrate at high frequencies, they also captured the video with a high-speed camera, as they had in some of the visual-microphone work.

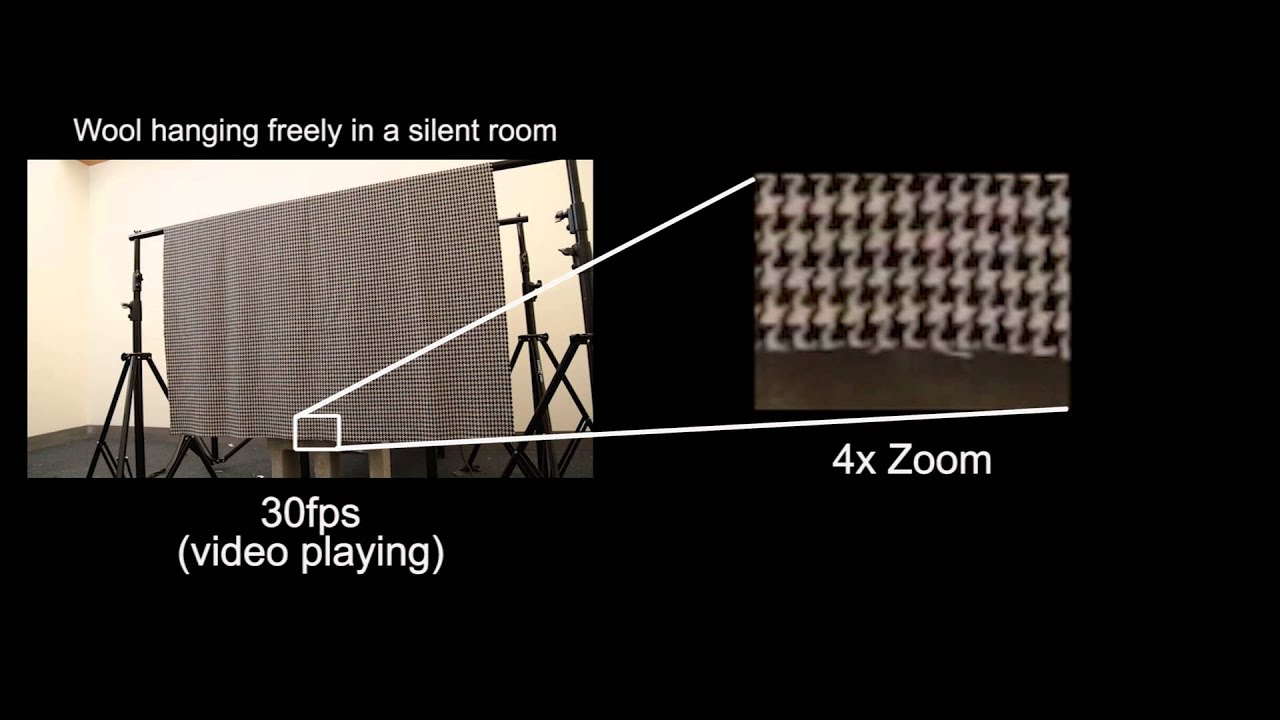

The fabrics, however, were flexible enough that the ordinary circulation of air in a closed room produced detectable vibrations, and their vibration rates were low enough to be measured with an ordinary digital camera.
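The difference in camera requirements follows from the sampling theorem: a camera recording at a given frame rate can faithfully represent only vibrations below half that rate; faster vibrations "alias" to lower apparent frequencies. The sketch below is a minimal illustration of that constraint. The function names and all numbers are hypothetical; the article does not report frame rates or vibration frequencies.

```python
# Minimal sketch of the Nyquist sampling constraint. Function names and
# example numbers are hypothetical, for illustration only.

def min_frame_rate(max_vibration_hz: float) -> float:
    """Lowest frame rate (fps) that can capture vibrations up to max_vibration_hz."""
    return 2.0 * max_vibration_hz


def aliased_frequency(true_hz: float, frame_rate: float) -> float:
    """Apparent frequency of a true_hz vibration sampled at frame_rate fps."""
    # Fold the true frequency into the representable band [0, frame_rate / 2].
    folded = true_hz % frame_rate
    return min(folded, frame_rate - folded)


if __name__ == "__main__":
    print(min_frame_rate(500.0))           # 1000.0
    print(aliased_frequency(500.0, 60.0))  # 20.0: a fast rod mode seen by a 60 fps camera
    print(aliased_frequency(5.0, 60.0))    # 5.0: a slow fabric sway is captured faithfully
```

Under these hypothetical numbers, a 500 Hz mode filmed at 60 fps would masquerade as a 20 Hz one, which is why fast vibrations call for a high-speed camera while slow ones do not.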

Even when its movement is undetectable to the human eye, a vibrating object usually vibrates at several frequencies at once. A given object’s preferred (resonant) frequencies, and the relative strength of its vibrations at each of them, form a pattern unique to that object, which a variation on the visual-microphone algorithm was able to extract.
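To make the idea of a frequency "pattern" concrete, here is a minimal sketch that turns a motion signal into a spectrum with a fast Fourier transform and reports its strongest frequencies. It assumes a per-frame displacement signal has already been extracted from the video; that step, which the visual-microphone algorithm performs from the pixels, is not shown, and this FFT-and-pick-peaks code is a generic stand-in rather than the researchers' method. The signal is synthetic, and the function names are hypothetical.

```python
import numpy as np


def vibration_spectrum(displacement: np.ndarray, frame_rate: float):
    """Return (frequencies_hz, amplitudes) for a 1-D per-frame motion signal."""
    centered = displacement - displacement.mean()    # remove the static offset
    amplitudes = np.abs(np.fft.rfft(centered))       # magnitude of each frequency bin
    freqs = np.fft.rfftfreq(len(centered), d=1.0 / frame_rate)
    return freqs, amplitudes


def dominant_frequencies(freqs: np.ndarray, amplitudes: np.ndarray, count: int = 2):
    """Frequencies of the `count` strongest bins, sorted ascending."""
    strongest = np.argsort(amplitudes)[-count:]
    return np.sort(freqs[strongest])


if __name__ == "__main__":
    frame_rate = 1000.0                        # hypothetical high-speed camera, fps
    t = np.arange(1000) / frame_rate           # one second of frames
    # Synthetic motion (not real data): two modes of different strength.
    signal = 1.0 * np.sin(2 * np.pi * 40 * t) + 0.3 * np.sin(2 * np.pi * 110 * t)
    freqs, amps = vibration_spectrum(signal, frame_rate)
    print(dominant_frequencies(freqs, amps))   # strongest modes: 40 Hz and 110 Hz
```

Both which frequencies appear and how strong each one is carry information; a learned model can use the whole amplitude array, not just the peak locations.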

The researchers then used machine learning to find correlations between those vibrational patterns and measurements of the objects’ material properties. The correlations they found provided good estimates of the elasticity of the rods and of the stiffness and weight per unit area of the fabrics. (Stiffness is a measure that factors in both elasticity, a property of a material, and the geometry of the object made from that material. The researchers knew the precise geometry of the rods but not that of the fabrics, which varied somewhat in size.)
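Why known geometry matters can be seen from textbook Euler–Bernoulli beam theory, swapped in here as a physics-based illustration rather than the researchers' learned model. For a slender beam, the first resonant frequency satisfies ω₁ = (β₁L)² / L² · √(EI / (ρA)), so once the geometry (length L, area A, area moment I) and density ρ are known, a measured frequency pins down the elasticity E. The sketch assumes a solid circular rod clamped at one end (cantilever, β₁L ≈ 1.8751); the boundary condition, function names, and example values are all assumptions.

```python
import math

# First-mode eigenvalue (beta_1 * L) of a clamped-free Euler-Bernoulli beam.
# The cantilever boundary condition is an assumption for this sketch.
BETA1_L = 1.8751


def _section(diameter_m: float):
    """Area A and area moment of inertia I of a solid circular cross-section."""
    area = math.pi * diameter_m ** 2 / 4.0
    second_moment = math.pi * diameter_m ** 4 / 64.0
    return area, second_moment


def first_frequency(E_pa, length_m, diameter_m, density_kg_m3):
    """Forward model: first resonant frequency (Hz) of the rod."""
    area, second_moment = _section(diameter_m)
    omega = (BETA1_L ** 2 / length_m ** 2) * math.sqrt(
        E_pa * second_moment / (density_kg_m3 * area)
    )
    return omega / (2.0 * math.pi)


def youngs_modulus_from_frequency(f1_hz, length_m, diameter_m, density_kg_m3):
    """Inverse model: Young's modulus E (Pa) from a measured first frequency."""
    area, second_moment = _section(diameter_m)
    omega = 2.0 * math.pi * f1_hz
    # Solve omega = (beta L)^2 / L^2 * sqrt(E I / (rho A)) for E.
    root = omega * length_m ** 2 / BETA1_L ** 2
    return density_kg_m3 * area * root ** 2 / second_moment


if __name__ == "__main__":
    # Hypothetical steel-like rod: E = 200 GPa, 0.5 m long, 1 cm diameter.
    f1 = first_frequency(200e9, 0.5, 0.01, 7850.0)
    print(f1)
    print(youngs_modulus_from_frequency(f1, 0.5, 0.01, 7850.0))
```

For these hypothetical values the first mode lands at about 28 Hz, and inverting it recovers the assumed modulus. Without knowing L and the diameter, the same frequency would be consistent with many different values of E, which mirrors why the fabrics' stiffness, rather than their elasticity, was the quantity estimated.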

Moreover, aberrations or discontinuities in an object’s typical vibrational patterns could indicate a defect in its structure. Identifying those types of correlations is the subject of the researchers’ ongoing work.
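As a deliberately simple, hypothetical illustration of that idea (the article says identifying such correlations is still ongoing work), one could compare an object's measured resonant frequencies against a reference from a known-good specimen and flag modes that shift by more than some tolerance. Damage such as a crack tends to reduce local stiffness, which typically lowers resonant frequencies. The function name, the 5 percent tolerance, and the in-order matching of modes are all assumptions.

```python
import numpy as np


def flag_frequency_shifts(reference_hz, measured_hz, tolerance=0.05):
    """Return (reference, measured) pairs whose relative shift exceeds tolerance.

    Assumes both inputs list the same modes in the same ascending order,
    so that peaks can be matched index by index (a simplification).
    """
    reference = np.asarray(reference_hz, dtype=float)
    measured = np.asarray(measured_hz, dtype=float)
    if reference.shape != measured.shape:
        raise ValueError("reference and measured must list the same modes")
    relative_shift = np.abs(measured - reference) / reference
    flagged = relative_shift > tolerance
    return [(float(r), float(m)) for r, m in zip(reference[flagged], measured[flagged])]


if __name__ == "__main__":
    # Hypothetical peak frequencies, not measurements.
    healthy = [40.0, 110.0, 230.0]
    inspected = [40.5, 98.0, 231.0]
    print(flag_frequency_shifts(healthy, inspected))  # [(110.0, 98.0)]
```

A real inspection system would need to account for temperature, mounting, and measurement noise, all of which can also shift frequencies; the fixed tolerance here stands in for that harder problem.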